As AI systems become more integrated into our lives, one thing is becoming clear: they need to explain themselves. Whether it’s a recommendation, a prediction, or a life-altering decision, people want to know why. And in many cases, they have the right to ask. This isn’t just a technical challenge; it’s a cultural one. Explainability has moved from being a "nice-to-have" feature to a matter of public debate, especially in sensitive areas like healthcare and criminal justice. In some countries, laws are starting to require a “right to explanation,” and that’s going to make it harder to rely on black-box systems like deep neural networks.

This shift raises an even bigger question: how do we make AI systems not just explainable, but trustworthy? Explainability and trust go hand in hand, but solving one doesn’t automatically solve the other. The real challenge lies in defining the “right” behavior for an AI system—and making sure that behavior aligns with human values.

The Utility Problem

To understand why this is hard, we need to talk about utility functions. In theory, a utility function is just a way of telling an AI system what we want it to do. But defining utility functions that align with human values turns out to be incredibly tricky. Peter Norvig explains this well:

"We’ve built this society in this infrastructure where we say we have a marketplace for attention. And we’ve decided as a society that we like things that are free. And so we want all apps on our phone to be free, and that means they're all competing for your attention. They can only win by defeating all the other apps, by stealing your attention... I'd like to find a way where we can change the playing field, so you feel more like these things are on your side."

This example highlights one of the core issues with utility functions: incentives. When attention becomes the currency, apps compete in a zero-sum game. The result? A marketplace where apps work against you, not with you. The same misalignment can happen in AI systems. If the utility function isn’t carefully designed, the system might optimize for something that isn’t what we actually want.

The Genie Problem

Here’s the issue with utility functions in a nutshell: AI systems are literal. They’ll give you exactly what you ask for, not what you want. This problem isn’t new—it’s the same one we see in stories about genies, King Midas, or the Sorcerer’s Apprentice. But in the context of AI, it gets much scarier. As Stuart Russell puts it:

"A system that is optimizing a function of n variables, where the objective depends on a subset of size k<n, will often set the remaining unconstrained variables to extreme values. If one of those unconstrained variables is something we care about, the solution found may be highly undesirable."

Imagine a highly capable AI system, connected to the internet and billions of devices. If its utility function isn’t perfectly aligned with human values, the consequences could be irreversible. This isn’t about emergent consciousness or sci-fi scenarios; it’s about decision-making quality. Even the smartest AI can make terrible decisions if its goals are misaligned.

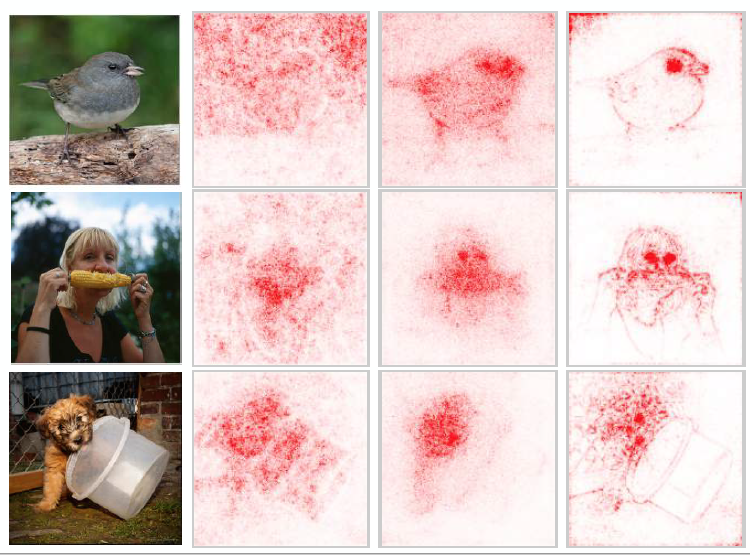

Here is a visual example of what that undesirable solution looks like:

Learning Instead of Encoding

So, how do we fix this? One promising approach is to let AI systems learn their utility functions from data, rather than trying to encode them manually. This is where fields like inverse reinforcement learning come in. The idea is to observe human behavior and infer the underlying goals. For example, if you watch an expert surgeon perform a procedure, you can try to deduce what they’re optimizing for—precision, speed, patient safety—and use that as the basis for training an AI system.

This approach has its limits. Human values are complex, and it’s hard to reduce them to a set of objectives. But learning from data is still easier—and often more accurate—than writing utility functions from scratch.

Explainability and Alignment

Explainability alone won’t solve the AI alignment problem, but it’s a step in the right direction. If people can understand why an AI system made a particular decision, they’re more likely to trust it. And if they don’t trust it, they can push back, creating a feedback loop that helps refine the system over time.

But there’s a deeper problem here: even if we make AI systems explainable, we still need to figure out how to align them with human values. That’s hard, because human values are messy and often contradictory. As Stuart Russell points out:

- The utility function might not perfectly reflect human values, which are hard to define.

- A sufficiently intelligent system might prioritize self-preservation and resource acquisition—not for its own sake, but to achieve its goals.

In other words, even the best-designed systems can go wrong if their objectives aren’t perfectly aligned.

The Path Forward

We’re at a point where optimizing utility functions is easy, but deciding what those functions should be is hard. The good news is that we’re starting to recognize the problem. Researchers are exploring ways to make AI systems more explainable, trustworthy, and aligned with human values. But this work is just beginning.

The challenge will only grow as AI systems become smarter and more autonomous. The key will be to keep asking hard questions—and to remember that the answers might change over time. Explainability, trust, and alignment aren’t destinations; they’re ongoing processes. If we approach them with the right mindset, we might just get what we want, not what we ask for.

There is also a whole philosophical side to it, where we can ask ourselves: Given unlimited time and perfect knowledge about (ideal) human behavior, can we find any reasonable approximation to “what a human wants"? We will leave the answer for discussion in another post and end with an old radio talk by alan turing.

Read more:

References

[1] European Union Regulations on Algorithmic Decision Making and a “Right to Explanation” Bryce Goodman, Seth Flaxman