TensorFlow is Google's second-generation system for implementing and deploying large-scale machine learning models. It is flexible enough to be used in both research and production. Computations in TensorFlow are expressed as stateful dataflow graphs.

Essential Vocabulary:

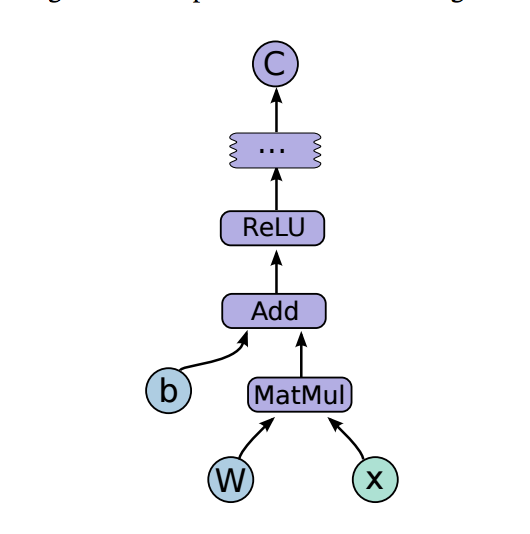

Basic computation model: Computations in TensorFlow are represented by a directed graph composed of a set of nodes. Each node has zero or more inputs and zero or more outputs and represents the instantiation of an operation. Graphs are constructed using one of the supported front-end languages (C++ or Python).

Control Dependency: A special edge along which no data flows. It indicates that the source node must finish executing before the destination node begins executing.

Variables: A special kind of operation that returns a handle to a persistent mutable tensor that survives across executions of a graph.

Tensors: They are the values that flow along the normal edges of the graph. A tensor is a typed multi-dimensional array; element types include 8- to 64-bit signed and unsigned integers, IEEE float and double, a complex number type, and a string type (an arbitrary byte array).

Session: A Session has to be created to execute the dataflow graph. Execution usually involves feeding in inputs and fetching outputs, often in batches. The Session supports two main operations:

Extend: adds nodes to the dataflow graph managed by the session.

Run: takes as arguments a set of named nodes to be computed and an optional set of tensors to be used in place of certain node outputs. It then uses the graph to work out all the nodes required to compute the requested outputs and executes them in an order that respects their dependencies.
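To make the behaviour of Run more concrete, here is a minimal pure-Python sketch (not TensorFlow code). The `run` function, the dictionary-based graph format, and the node names are all invented for this illustration; the sketch only assumes that each node is a function of its inputs.

```python
# Toy model of Session.Run; every name here is hypothetical, not TensorFlow's API.

def run(graph, fetches, feed=None):
    """Evaluate only the nodes needed to compute `fetches`.

    graph: dict mapping node name -> (function, list of input node names)
    feed:  dict mapping node name -> value used in place of that node's output
    """
    values = dict(feed or {})  # fed values count as already computed
    executed = []              # order in which nodes were actually executed

    def evaluate(name):
        if name not in values:
            fn, inputs = graph[name]
            args = [evaluate(i) for i in inputs]  # dependencies run first
            values[name] = fn(*args)
            executed.append(name)
        return values[name]

    return [evaluate(f) for f in fetches], executed


graph = {
    "a": (lambda: 3.0, []),
    "b": (lambda: 2.0, []),
    "c": (lambda: 5.0, []),
    "sum": (lambda x, y: x + y, ["b", "c"]),
    "prod": (lambda x, y: x * y, ["a", "sum"]),
    "unused": (lambda x: -x, ["a"]),  # never needed for "prod"
}

outputs, executed = run(graph, ["prod"])
fed_outputs, fed_executed = run(graph, ["prod"], feed={"sum": 10.0})
```

In the first call only the nodes that `prod` depends on are executed, so `unused` is skipped. In the second call the fed value replaces the output of `sum`, so `b`, `c`, and `sum` are never executed.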

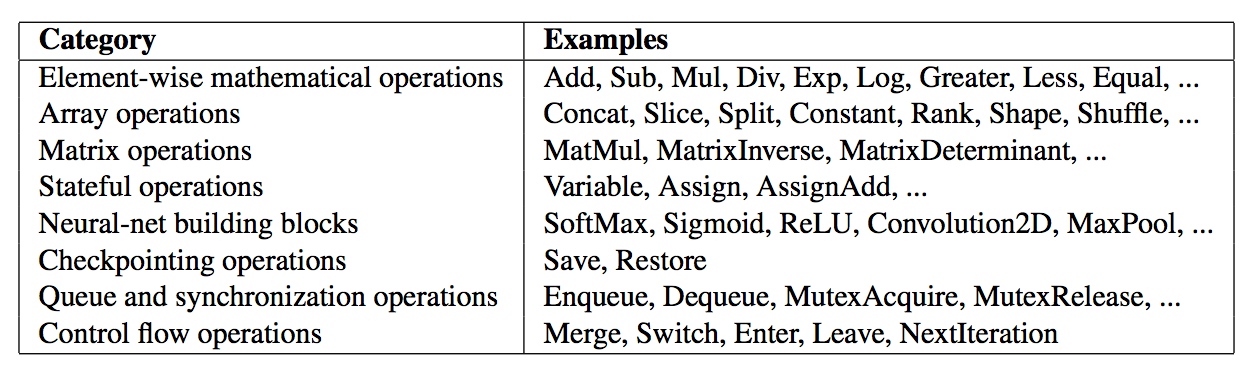

Operation: It represents computations such as those shown in the table below:

An operation can have attributes and they must be provided or inferred at graph-construction time.

Execution:

- Input: The feed_dict parameter of the session.run method is used to feed in the input data. TensorFlow also supports reading tensors directly from files.

- When implementing a machine learning algorithm, we store the parameters of the model in tensors held in variables.

- In the basic scenario of execution on a single device, the nodes of the graph (representing the computation) are executed in an order that respects the dependencies between nodes.
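One way to obtain an order that respects the dependencies is to count, for each node, how many of its dependencies have not yet run, and to move a node onto a ready queue once that count reaches zero. The sketch below is plain Python rather than TensorFlow code; the function `execution_order` and the node names are assumptions made for this example.

```python
from collections import deque


def execution_order(dependencies):
    """Return one execution order that respects all dependencies.

    dependencies: dict mapping each node to the list of nodes it must wait
    for (data inputs and control dependencies are treated alike).
    """
    # Number of not-yet-executed dependencies per node.
    pending = {node: len(deps) for node, deps in dependencies.items()}

    # Reverse edges: which nodes are waiting on a given node.
    consumers = {node: [] for node in dependencies}
    for node, deps in dependencies.items():
        for dep in deps:
            consumers[dep].append(node)

    ready = deque(sorted(n for n, count in pending.items() if count == 0))
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)  # "execute" the node
        for consumer in consumers[node]:
            pending[consumer] -= 1
            if pending[consumer] == 0:
                ready.append(consumer)

    if len(order) != len(dependencies):
        raise ValueError("graph contains a cycle")
    return order


# Hypothetical graph computing relu(matmul(W, x) + b).
deps = {
    "W": [],
    "x": [],
    "b": [],
    "matmul": ["W", "x"],
    "add": ["matmul", "b"],
    "relu": ["add"],
}
order = execution_order(deps)
```

Any order returned by this function runs `matmul` after `W` and `x`, `add` after `matmul` and `b`, and `relu` last.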

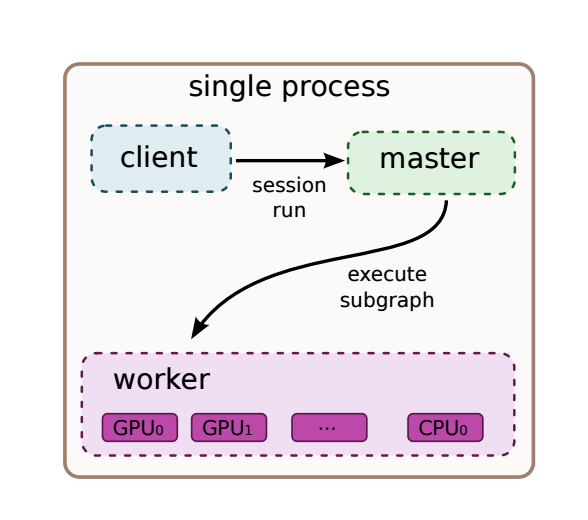

Implementation: The TensorFlow system consists of client, master, and worker processes. The client uses the session interface to communicate with the master; the master schedules and coordinates the worker processes and relays results back to the client. Each worker process is responsible for arbitrating access to devices such as CPU cores or GPUs and for executing graph nodes on those devices.

Gradient Computations: Gradient descent is one of the most popular optimisation algorithms (typically used to minimise a cost function while training an ML model). TensorFlow provides built-in support for automatic gradient computation.
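As a reminder of what gradient descent itself does, here is a minimal pure-Python sketch on a one-parameter cost function. The cost function, learning rate, and step count are arbitrary choices for illustration, and the derivative is written by hand here, whereas TensorFlow would derive it from the graph.

```python
def cost(w):
    """Toy cost function with its minimum at w = 3."""
    return (w - 3.0) ** 2


def grad(w):
    """Derivative of cost with respect to w, written by hand."""
    return 2.0 * (w - 3.0)


w = 0.0              # initial parameter value
learning_rate = 0.1  # step size (arbitrary choice)

for step in range(100):
    w -= learning_rate * grad(w)  # move against the gradient
```

Each step shrinks the distance to the minimum by a constant factor, so after 100 steps `w` is very close to 3.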

Control Flow (if-conditionals and while-loops): Explicit control flow is usually not needed, but TensorFlow does provide a few primitive operators. For example, the Switch and Merge operators can be used to skip the execution of an entire subgraph based on the value of a boolean tensor. The Enter, Leave, and NextIteration operators allow us to express iteration.
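The way Switch and Merge skip a subgraph can be sketched in a few lines of plain Python. This is a toy model, not TensorFlow's implementation: the `DEAD` marker and the helpers `switch`, `merge`, `run_if_alive`, and `cond` are all hypothetical names introduced for this example.

```python
# Toy model of Switch/Merge; every name here is hypothetical.
DEAD = object()  # marks an output that was never produced


def switch(value, pred):
    """Forward value to one of two outputs, returned as (false_out, true_out)."""
    return (DEAD, value) if pred else (value, DEAD)


def merge(*inputs):
    """Forward whichever input is alive."""
    for value in inputs:
        if value is not DEAD:
            return value
    return DEAD


def run_if_alive(fn, value):
    """Skip fn entirely when its input is dead."""
    return DEAD if value is DEAD else fn(value)


def cond(pred, x, true_fn, false_fn):
    """Conditional built from switch and merge."""
    false_out, true_out = switch(x, pred)
    return merge(run_if_alive(true_fn, true_out),
                 run_if_alive(false_fn, false_out))


executed = []  # records which branch bodies actually ran


def double(v):
    executed.append("double")
    return v * 2


def negate(v):
    executed.append("negate")
    return -v


taken_true = cond(True, 5, double, negate)
taken_false = cond(False, 5, double, negate)
```

In each call only one branch body runs: the branch that receives the dead output is skipped, and `merge` forwards the result of the other one.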

Some Examples:

1. Variables: They generally hold and update the parameters used in training an ML model. On creation we pass a tensor as the initial value to the Variable() constructor; this initial value determines the type and shape of the variable.

Example:

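import tensorflow as tf

state = tf.Variable(0, name="counter")  # declare (pass initial value and name)

new_value = tf.add(state, tf.constant(1))  # op computing the current value plus one

update = tf.assign(state, new_value)  # op that writes new_value into state

with tf.Session() as sess:

    sess.run(tf.initialize_all_variables())  # initialize the variables

    sess.run(update)  # apply the update once

    print(sess.run(state))  # execute the graph (in this case, retrieve the value)

Neither the initialization nor the update happens until the corresponding op is executed with session.run().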

2. Add/Multiply Operations with Constants:

input1 = tf.constant(3.0)

input2 = tf.constant(2.0)

input3 = tf.constant(5.0)

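intermed = tf.add(input2, input3)

mul = tf.mul(input1, intermed)  # tf.mul is the r0.7 name; TensorFlow 1.0 renamed it to tf.multiply

with tf.Session() as sess:

    result = sess.run([mul, intermed])  # fetch both tensors in a single run call

    print(result)

3. Inputting data (placeholders and feed dictionaries):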

There are three methods of getting data into a TensorFlow program:

i. Preloading: For small datasets we can hold all the data in a constant or a variable

ii. Feeding: Python code supplies the data at each step, for example through the feed_dict argument to a run() or eval() call

#placeholders are dummy nodes that provide entry points for data into the computational graph

input1 = tf.placeholder(tf.float32) #pass type

input2 = tf.placeholder(tf.float32)

output = tf.mul(input1,input2)

feed = {input1:[7.],input2:[2.]}

#feed data into the computational graph and fetch output

with tf.Session() as sess:

    print(sess.run([output], feed_dict=feed))

#Placeholders exist to serve as the target of feeds.

iii. Reading from files:

A list of filenames can, for example, be built as:

[("file%d" % i) for i in range(2)]

The list is passed to tf.train.string_input_producer, which creates a queue of filenames. The filename queue can then be passed to a reader's read method. Readers are selected based on the input file format.

4. Graph Execution: A computational graph expressed in TensorFlow has no numerical value until it is executed. We execute the graph by creating a session (which encapsulates the environment in which tensor objects are evaluated). A good way to do this is with the with statement.

with tf.Session() as sess:

    print(sess.run(c))  # evaluate c through the session

    print(c.eval())  # shorthand that uses the session made default by the with block

where c is the tensor we want to evaluate.

5. Saving the model:

A tf.train.Saver object can be used to save and restore the variables of a model.

Example:

saver = tf.train.Saver()

...

save_path = saver.save(sess, "/tmp/model.ckpt")  # write a checkpoint file

print("Model saved in file: %s" % save_path)

...

saver.restore(sess, "/tmp/model.ckpt")  # load the saved variables back

print("Model restored.")

If you want more code examples, have a look at the following:

Hello World Program: https://www.tensorflow.org/versions/r0.7/tutorials/mnist/beginners/index.html

https://github.com/tflearn/tflearn/blob/master/tutorials/intro/quickstart.md