In this post we learn about the basics of process analytics. This is part 1 of a series of posts in which we explore various topics surrounding process analytics.

A business process is a “collection of related and structured activities or tasks” that focuses on providing a certain service or achieving certain goals in a business environment.

Business process modelling is the practice of representing the information flow, decision logic and business activities that occur in a typical business process. It can also be seen as the ordering of work activities across time and place, with clearly defined inputs and outputs, which gives structure to a set of actions occurring in a predefined sequence.
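To make the idea of ordered activities with defined inputs and outputs concrete, here is a minimal Python sketch. The `Activity` type, the `check_sequence` helper and the order-to-cash steps are hypothetical illustrations rather than part of any modelling standard: the code walks the predefined sequence and flags any activity whose inputs have not been produced by an earlier step (or supplied at the start).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """One step in a sequential process model (hypothetical example type)."""
    name: str
    inputs: frozenset
    outputs: frozenset


def check_sequence(activities, initial):
    """Walk the activities in their predefined order and return the names of
    those whose inputs are not yet available when the activity is reached."""
    available = set(initial)
    problems = []
    for act in activities:
        if not act.inputs <= available:
            problems.append(act.name)
        # Outputs become available to every later activity in the sequence.
        available |= act.outputs
    return problems


# Hypothetical order-to-cash process: each step lists what it consumes and produces.
order_to_cash = [
    Activity("Receive order", frozenset({"customer request"}), frozenset({"order"})),
    Activity("Check credit", frozenset({"order"}), frozenset({"credit decision"})),
    Activity("Ship goods", frozenset({"order", "credit decision"}), frozenset({"shipment"})),
    Activity("Send invoice", frozenset({"shipment"}), frozenset({"invoice"})),
]

print(check_sequence(order_to_cash, {"customer request"}))  # []
```

Swapping "Check credit" and "Ship goods" would make `check_sequence` return `['Ship goods']`, because the credit decision is not yet available when shipping is reached.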

Process Model Representation:

Process models are widely used in organizations to document the internal procedures through which business gets done, while at the same time helping organizations manage complexity and gain new insights. Several notations exist for representing business processes, ranging from simple text documents to Petri nets and Business Process Model and Notation (BPMN). Modelling business processes in these notations highlights the core parts of the process while abstracting away the less important details of the activities happening inside an organization. Each notation has its own strengths and weaknesses. Notations such as Petri nets allow us to analyze, verify and validate different process properties using formal methods, whereas declarative languages are useful for describing the constraints, temporal aspects and costs associated with the decisions and tasks occurring inside a process.
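To show what formal analysis on a Petri net can look like, the following Python sketch encodes a small, hypothetical order-handling net as a mapping from each transition to its input and output places, then explores every reachable marking (distribution of tokens) breadth-first. A marking in which no transition can fire is reported as dead; if the only dead marking is the intended final one, the net has no deadlocks. This is a simplified illustration of reachability analysis, not a full soundness check or any particular tool's API, and it assumes the net is bounded (the `limit` guard stops the search otherwise).

```python
from collections import deque

# Hypothetical net: transition name -> (input places, output places).
NET = {
    "register": ({"start"}, {"p1"}),
    "check": ({"p1"}, {"p2"}),
    "approve": ({"p2"}, {"end"}),
    "reject": ({"p2"}, {"end"}),
}


def enabled(marking, pre):
    """A transition is enabled if every input place holds at least one token."""
    return all(marking.get(p, 0) >= 1 for p in pre)


def fire(marking, pre, post):
    """Consume one token from each input place, produce one in each output place."""
    new = dict(marking)
    for p in pre:
        new[p] -= 1
    for p in post:
        new[p] = new.get(p, 0) + 1
    return {p: n for p, n in new.items() if n > 0}


def key(marking):
    """Hashable, order-independent representation of a marking."""
    return tuple(sorted(marking.items()))


def explore(net, initial, limit=10_000):
    """Breadth-first search over reachable markings; returns (reachable, dead)."""
    seen = {key(initial)}
    dead = []
    queue = deque([initial])
    while queue:
        m = queue.popleft()
        successors = [fire(m, pre, post) for pre, post in net.values() if enabled(m, pre)]
        if not successors:
            dead.append(m)  # no transition can fire from here
        for nxt in successors:
            k = key(nxt)
            if k not in seen:
                if len(seen) >= limit:
                    raise RuntimeError("state space too large; net may be unbounded")
                seen.add(k)
                queue.append(nxt)
    return seen, dead


reachable, dead = explore(NET, {"start": 1})
print(len(reachable))  # 4
print(dead)            # [{'end': 1}]
```

Because the only dead marking is the one with a single token in `end`, this toy net cannot get stuck halfway through a case.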

Five Common Use Cases for Process Mining, According to Gartner [1]:

- Improving Processes by Algorithmic Process Discovery and Analysis

- Improving Auditing and Compliance by Algorithmic Process Comparison, Analysis and Validation

- Improving Process Automation by Discovering and Validating Automation Opportunities

- Supporting Digital Transformation by Linking Strategy to Operations

- Improving IT Operations Resource Optimization by Algorithmic IT Process Discovery and Analysis

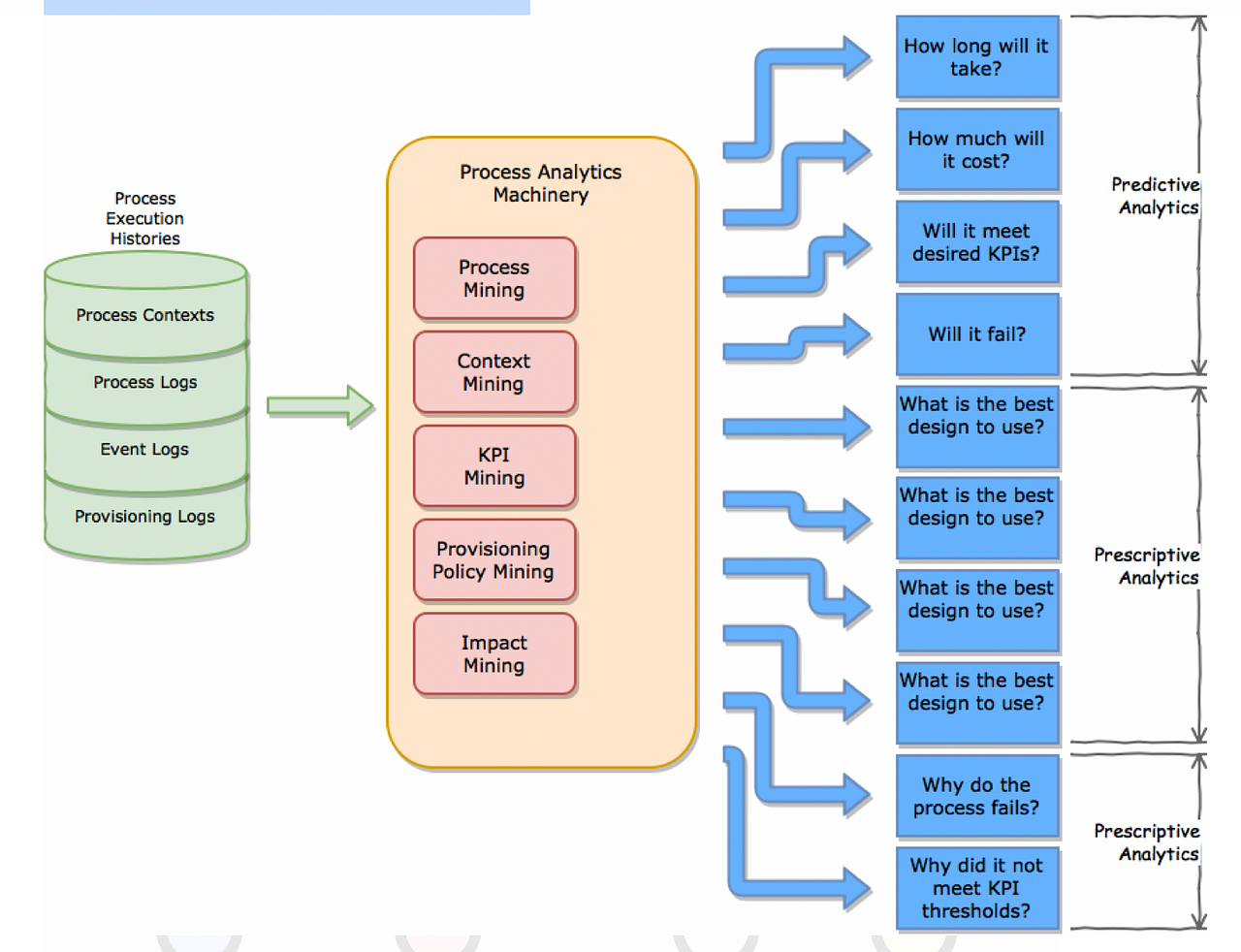

Types of Relevant Organisational Data:

- Process Logs: These record the process tasks performed, together with a time-stamp and an indication of the resource (an employee, a machine, etc.) used to perform each task.

- Event Logs: These record critical events (such as state transitions of objects impacted by a process) together with the time when these occurred.

- Provisioning Logs: These record in greater detail which people or machines were deployed to perform process tasks.

- Process Contexts: These record a description of a process's operating environment. The time-stamps associated with a process can be used to access the sales data current at that time, financial market sentiment (via publicly available data) or the political/economic context (via open news sources).
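As a rough sketch of how these data types relate, the Python code below models a process log entry with a case identifier, the task performed, its time-stamp and the resource that performed it, then groups the entries into per-case traces ordered by time. It also shows how a time-stamp could be used to look up context data that was current at that moment. The `LogEntry` fields (in particular `case_id`), the helper names and the sample data are hypothetical assumptions for illustration.

```python
from bisect import bisect_right
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class LogEntry:
    """One row of a hypothetical process log."""
    case_id: str         # process instance the task belongs to (assumed field)
    activity: str        # task performed
    timestamp: datetime  # when it was performed
    resource: str        # employee, machine, etc. that performed it


def to_traces(log):
    """Group entries by case and order each case's activities by time-stamp."""
    by_case = defaultdict(list)
    for entry in log:
        by_case[entry.case_id].append(entry)
    return {
        case: [e.activity for e in sorted(entries, key=lambda e: e.timestamp)]
        for case, entries in by_case.items()
    }


def context_at(ts, series):
    """Return the latest context value recorded at or before `ts`.
    `series` is a list of (timestamp, value) pairs sorted by timestamp."""
    i = bisect_right([t for t, _ in series], ts)
    return series[i - 1][1] if i else None


log = [
    LogEntry("c1", "Register", datetime(2021, 3, 1, 9, 0), "alice"),
    LogEntry("c2", "Register", datetime(2021, 3, 1, 9, 5), "bob"),
    LogEntry("c1", "Approve", datetime(2021, 3, 1, 10, 0), "carol"),
    LogEntry("c1", "Check", datetime(2021, 3, 1, 9, 30), "bot-1"),
    LogEntry("c2", "Check", datetime(2021, 3, 1, 9, 40), "bot-1"),
]
# Made-up daily sales figures standing in for a process-context data source.
daily_sales = [(datetime(2021, 2, 28), 120), (datetime(2021, 3, 1), 95)]

print(to_traces(log))
# {'c1': ['Register', 'Check', 'Approve'], 'c2': ['Register', 'Check']}
print(context_at(log[0].timestamp, daily_sales))  # 95
```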

The process analytics machinery includes the following 5 components:

- Process mining: This is used to learn process designs from past process logs. Process mining has recently emerged as a scientific discipline at the interface between process models and event data. On the one hand, conventional Business Process Management (BPM) and Workflow Management (WfM) approaches and tools are mostly model-driven, with little consideration for event data. On the other hand, Data Mining (DM), Business Intelligence (BI) and Machine Learning (ML) focus on data without considering end-to-end process models. Process mining aims to bridge the gap between BPM and WfM on one side and DM, BI and ML on the other. The challenge here is to turn torrents of event data (“Big Data”) into valuable insights into process performance and compliance.

- Context mining: This module mines the context associated with a process or process task along the lines described above.

- KPI mining: This module is used to learn the expected KPI (or Quality-of-Service) performance of a process or process task, given that there is often considerable variability in these measures.

- Provisioning policy mining: This module helps learn the provisioning policies (rules for allocating resources to tasks) that are associated with the best process performance.

- Impact mining: This module is used to mine process impact (i.e., the changes to the environment achieved by the execution of a process) — usually from process logs and event logs.
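As a rough illustration of the process mining and KPI mining modules, the Python sketch below computes a directly-follows graph (how often one activity immediately follows another within a case), which many discovery techniques use as a starting point, and summarises case durations as a simple KPI with its mean and spread. It is not a complete discovery algorithm such as the alpha or inductive miner, and the `(case_id, activity, timestamp)` event layout and sample data are hypothetical.

```python
from collections import Counter, defaultdict
from datetime import datetime
from statistics import mean, pstdev

# Hypothetical event log: (case_id, activity, timestamp).
EVENTS = [
    ("c1", "Register", datetime(2021, 3, 1, 9, 0)),
    ("c1", "Check", datetime(2021, 3, 1, 9, 30)),
    ("c1", "Approve", datetime(2021, 3, 1, 10, 0)),
    ("c2", "Register", datetime(2021, 3, 1, 9, 5)),
    ("c2", "Check", datetime(2021, 3, 1, 9, 40)),
    ("c2", "Reject", datetime(2021, 3, 1, 11, 5)),
    ("c3", "Register", datetime(2021, 3, 2, 8, 0)),
    ("c3", "Check", datetime(2021, 3, 2, 8, 20)),
    ("c3", "Approve", datetime(2021, 3, 2, 9, 0)),
]


def cases(events):
    """Group events by case and sort each case chronologically."""
    grouped = defaultdict(list)
    for case_id, activity, ts in events:
        grouped[case_id].append((ts, activity))
    return {c: sorted(evts) for c, evts in grouped.items()}


def directly_follows(events):
    """Count how often activity a is immediately followed by b in the same case."""
    dfg = Counter()
    for evts in cases(events).values():
        acts = [a for _, a in evts]
        dfg.update(zip(acts, acts[1:]))
    return dfg


def case_duration_kpi(events):
    """Mean and population standard deviation of case durations, in minutes."""
    durations = [
        (evts[-1][0] - evts[0][0]).total_seconds() / 60
        for evts in cases(events).values()
    ]
    return mean(durations), pstdev(durations)


print(dict(directly_follows(EVENTS)))
# {('Register', 'Check'): 3, ('Check', 'Approve'): 2, ('Check', 'Reject'): 1}
print(case_duration_kpi(EVENTS))
# mean 80.0 minutes, standard deviation about 28.28 minutes
```

Reporting the spread alongside the mean reflects the point above that KPI measures often vary considerably between process instances.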

- The key predictive analytics insights concern the time, cost and other KPIs of process designs that are about to be executed, or of process instances that are currently executing. These insights can also be used to predict process failure (or exceptional termination).

- The key prescriptive analytics insights are used to decide which process design to use, how to complete a partially completed process instance (i.e., which suffix of the task sequence to execute), which resources are best allocated to process tasks (either for a process design about to be executed or for a partially completed process instance) and which provisioning policies work best.

- Diagnostic analytics can help explain why a process failed, or why it failed to meet KPI targets.
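A deliberately naive predictive baseline can make the first of these points concrete. The Python sketch below, using hypothetical completed cases, learns for each activity the average time remaining until case completion and then predicts the remaining time of a running case from its most recent activity. Practical predictive process monitoring approaches use much richer features (the full prefix, resources, context); the function names and data here are illustrative only.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical completed cases: chronologically ordered (activity, timestamp) pairs.
HISTORY = [
    [("Register", datetime(2021, 3, 1, 9, 0)),
     ("Check", datetime(2021, 3, 1, 9, 30)),
     ("Approve", datetime(2021, 3, 1, 10, 0))],
    [("Register", datetime(2021, 3, 1, 9, 5)),
     ("Check", datetime(2021, 3, 1, 9, 40)),
     ("Reject", datetime(2021, 3, 1, 11, 5))],
    [("Register", datetime(2021, 3, 2, 8, 0)),
     ("Check", datetime(2021, 3, 2, 8, 20)),
     ("Approve", datetime(2021, 3, 2, 9, 0))],
]


def fit_remaining_time(history):
    """For each activity, average the seconds from that activity until case completion."""
    samples = defaultdict(list)
    for case in history:
        end = case[-1][1]
        for activity, ts in case:
            samples[activity].append((end - ts).total_seconds())
    return {a: mean(v) for a, v in samples.items()}


def predict_remaining(model, running_case):
    """Predict the remaining time of a running case from its most recent activity.
    Returns None if that activity never appeared in the history."""
    seconds = model.get(running_case[-1][0])
    return None if seconds is None else timedelta(seconds=seconds)


model = fit_remaining_time(HISTORY)
running = [("Register", datetime(2021, 3, 3, 9, 0)), ("Check", datetime(2021, 3, 3, 9, 25))]
print(predict_remaining(model, running))  # 0:51:40
```

Keying the prediction on the last activity alone keeps the example small; it ignores how long the running case has already taken and which resources are involved.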

Organisations can drive business value by:

- Mapping their processes (both manually and by leveraging process discovery tools), i.e., mapping their strategy-decision-data landscape, to identify low-hanging fruit for decision automation.

- Putting in place a process analytics solution for the critical decisions identified in the previous step, using a combination of off-the-shelf tools.

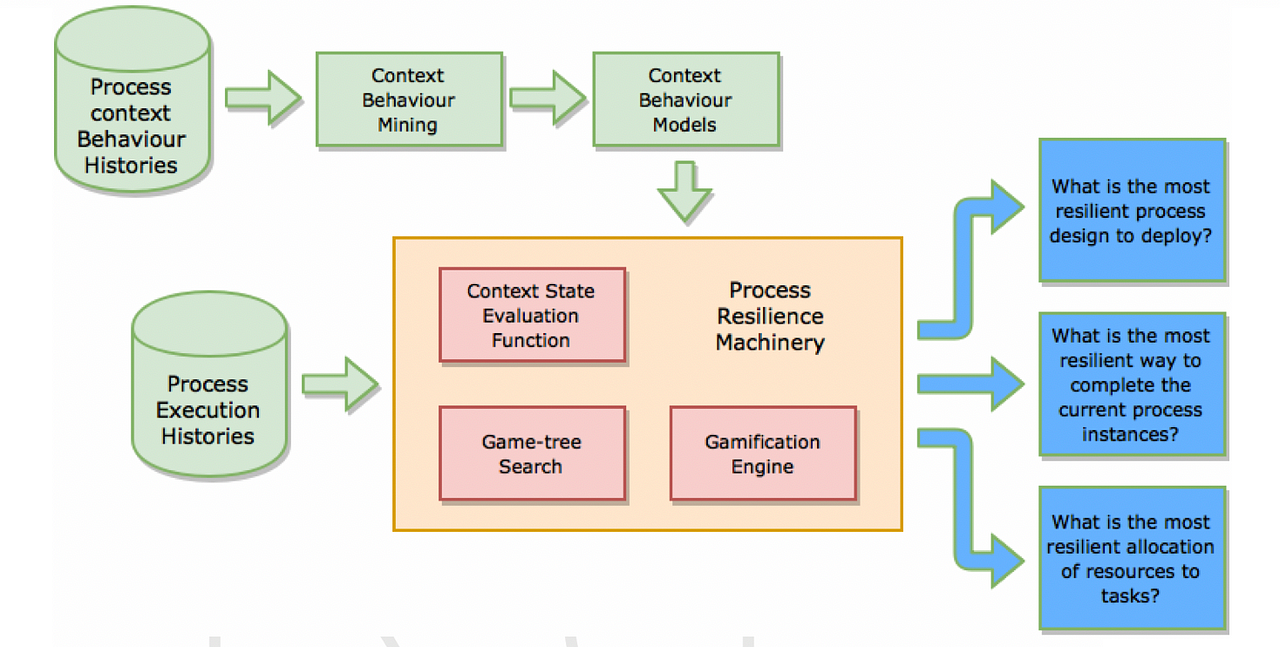

- Adding flexibility and resilience to the process execution machinery (to cope with Covid-like events).

- Putting in place an over-arching process governance framework.