AlphaGo is the first computer program to defeat a professional human Go player, the first to defeat a Go world champion, and is arguably the strongest Go player in history. This is one the major feats of AI research in the last decade. Go originated in China over 3,000 years ago. Winning this board game requires multiple layers of strategic thinking. The program developed by deep mind innovates on multiple fronts. For example combination of self-play and supervised learning from human expert games allows it to train on a large number of examples. Similarly, they introduced a search algorithm that combines Monte Carlo simulation with value and policy networks.

Planning in AI:

Basic planning problem refers to the problem of finding optimum sequences of actions leading from initial state to the goal state where states correspond to possible worlds. A planning graph can be constructed incrementally by defining: a initial state, a goal state and a set of allowed actions in each state. We can further assume that for agents that operate in real world, the goal of planner is to achieve a particular world state described logically, assuming that world is affected by only the actions of the planner\cite{russell2016artificial}.

When it comes to developing solutions for Planning Problems there exist several challenges. For starters there is uncertainty involved especially if the environment is stochastic or if there are adversaries present who are actively trying to impede the progress of the given agent. Solutions to planning problems don’t generally come with guarantees for achieving goal state if we follow sequence of actions. Furthermore, they must employ effective approaches(such as divide and conquer) to tackle the problem combinatorial explosion. In a given domain n propositions could lead to $2{^n}$ states making it PSPACE hard. There exist several approaches for find solutions to planning problems. Popular ones include GRAPHPLAN, SATPLAN, and FF. These approaches employ useful heuristics and help tackle the representational and combinatorial aspects associated with the planning problems.

Planning graphs algorithms such as GRAPHPLAN record mutexes to pinpoint the points of hard interactions. In other approaches we tranform planning problem as Boolean satisfiability problem or as a constraint satisfaction problem. We can then perform search for example to prove an existence of a solution or search in the space of ordered plans to find a model(described by assigning truth values) that satisfy the constraints defined by initial state, Goal state and domain axioms. SATPLAN is one such approach which uses the general CNF form to construct a range of mutex relations similar to GRAPHPLAN without defining a specific data structure.

Planning systems have a lot of industry applications. For example in business domains agents can have multiple competing objectives with never-ending processes thus making it really hard to define a termination criteria/state. e.g A telecom company would like to first achieve the state of being the market leader and then maintain it. It could also have multiple goals where it would try to maximise revenue and minimise investment costs all. During this scenario the market could behave in unpredictable ways and making the prediction of exogenous events hard.

Conditional Planning:

Conditional Planning is needed when the environment is non-deterministic and the successor states cannot be determined by the actions of the agent. In this situtation we need a mechanism to deal with uncertainty in order to achieve our goals. Another important aspect is the observability of the environment. We start with fully observable case which is bit simpler compared to other scenarios. The planning solutions in this case are similar to the approaches discussed in the previous sections. i.e we could find plans by doing a similar kind of state space search as we did in the case of deterministic planning. But to cater for non-determinisim we use a more general notion of plan representation by introducing branches(and loops). Plans are directed graphs with nodes of degree 1 labeled with operators and edges from nodes of degree ≥ 2 labeled with formulae.

To develop solutions in these senarios, We can start by modifying the MINIMAX algorithms such that we replace the Max, Min Nodes to represent our state space as a graph consisting of OR and AND Nodes. Such graph provides us with a way to deal with contingencies where we can follow another new route based on a certain condition becoming true. The agent thus makes decisions by checking the state of the environment and checking conditional steps to make an action choice.

Another scenario would be a case where environment is partially observable this making modelling harder because the agent has limited information about the actual state of the world.

Minimax Tree Search

In AI games usually refer to a multi-agent environment of a special kind which may involve two or more players that for example take turns and operate in environments which maybe either deterministic or non-deterministic. On a higher level we can imagine a competitive environment where various agents have goals that are in conflict. Agents must come up with a sequence of actions that when executed lead to realization of their goals but they must also model or take into account the future consequences of their adversaries actions and the outcomes of those actions. Search in this case is the process of finding the action sequence set required to reach the goal state.

The unpredictability of actions of other agents in the environment makes this setting particularly interesting and challenging. Many of them are hard problems because the average branching factor of game tree is quite high. e.g Chess can have $35^{100}$ or $10^{154}$ nodes with a lot of repeated nodes, making the problem computationally intractable. To make smart moves in a limited time, we then need to make use of clever heuristics for search algorithms that traverse the game tree. The solutions to such problems have potential applications in various domains such as business process analytics where we can tackle problems involving complex decision around deployment of business strategies.

A game tree has nodes representing game states and edges as moves. We construct the game tree by using the actions and results function. Various algorithms and techniques have been proposed to tackle Adversarial Search problems and make optimal decisions [1]. We assume that agents performing this search start from a known state and operate in a static, fully observable environment and expect the search function to return sequence of actions leading to the goal state.

During the game play each of the agent starts from a well defined initial state and is allowed to only take certain actions depending on the state agent is in. Thus defining the game as one that consists of declaring an initial or start state, a so called Action function' which given a current state of agent returns the available actions and secondly a function called Results function' which returns the results of actions that the agent has executed from a particular state. We also require a `Terminal Test' function that checks if the goal state has reached and as we’ll see later on, we may also chose to use a depth-cut off to limit the search(based on the available compute budget) to tackle the problem of high branching factor. Lastly we need a utility function or an evaluation function which returns a numeric value depending on the goodness of a Leaf nodes or pseudo-leaf node respectively.

In this section we will take a look at workings of MINIMAX search and Monte-Carlo search which is more popular these days due to success of AlphaGo. We will review them in the next sections, discuss how pruning can be highly beneficial for ignoring parts of the game tree that are don’t contribute to smart decision making. Furthermore to make the problem more tractable, instead of doing uninformed search we must deploy clever heuristics that allow us to explore to a depth based on available compute budget. We will conclude by making general comment comparing the effectiveness of these algorithms.

Minimax Tree Search

MINIMAX Search assumes two agents or players that have opposing goals. For a given game state each agent should take actions such that it maximizes the chances of success(achieving goal state) from the perspective of that particular player. We start by defining a utility function which will be used to evaluate a given state. It must capture the notion of winning or loosing based on the game rules or domain we are operating in. The task of each player or party in the game is then to minimize or maximize the utility.

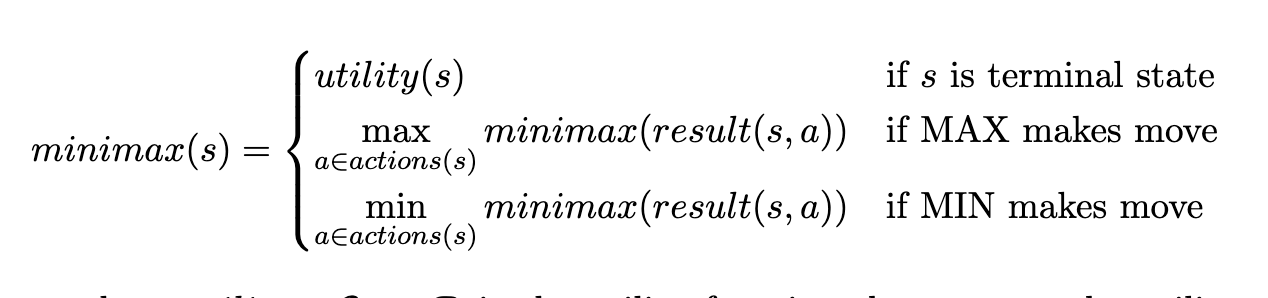

where (utility : \mathcal{S} \rightarrow \mathcal{R}) is the utility function that returns the utility value for a given

state (s), $result : \mathcal{S} \times \mathcal{R} \rightarrow \mathcal{S} $ returns the next state if a particular action is taken by the player, and (action : \mathcal{S} \rightarrow \mathcal{A^{*}}) returns a set of legal actions that are available to the player at the given game state (s). Once we know the minimax value, the optimal choice for a player in a certain state can be determined by simply selecting the action that leads to a state with the highest minimax value.

The standard MiniMax Algorithm is shown in figure 1. It performs a recursive search of the game tree until it reaches the leaf nodes. The leaf nodes are evaluated by using a utility function. It then backtracks evaluating nodes on each level and returning the min or the max value respectively. This Depth-first exploration is compute intensive. The time complexity of the minimax algorithm is $\mathcal{O}(n^{m})$ and the space complexity is $\mathcal{O}(nm)$ where (n) represents the branching factor and and (m) is the depth of the tree. For many practical applications this time cost is impractical. For real world applications applications we explore the tree to a certain predefined cut-off depth (n) where we compute the the minimax value by applying an evaluation function to the so called pseudo-leaf nodes. This reduces the time it takes to search the tree and for many games it can return effective decision recommendations. Coming up with an effective evaluation function however is tricky business and again depends on the domain we are operating in. Monte-Carlo Sampling which we will discuss in the next section can be effective alternative in scenarios the game rules or domain requires complex evaluation function.

Another approach which allows us to deal with the exponential depth problem is to apply a technique called alpha-beta pruning which cuts down the search by pruning away branches that have no effect on the final results of the search.

Let $\alpha$ represent best choice(or highest minimax value) for the max player and $\beta$ represent the best choice(or the lowest minimax value) for the min player. In pruning we don’t explore the nodes when the current node is ‘worse’ than the current $\alpha$ or $\beta$. At that point the process of recursion is terminated and search is moved to unexplored nodes (described in algorithm below)