Process analytic approaches allow organizations to support the practice of Business Process Management and continuous improvement by leveraging all process-related data to identify performance bottlenecks, reduce costs, extract insights and optimize the utilisation of available resources. For a long time, process analytics was an academic discipline built around the field of business process management (BPM). Now industry is starting to take notice: tools are being built, and process mining solutions are being deployed that leverage event logs generated by organizations' information systems to discover and improve the processes actually being executed on the ground.

Developing process analytic capabilities enables analytics-driven decision making and can support organizations in the following ways:

- Assisting organizations in understanding process behaviour captured in process execution logs.

- Identifying performance bottlenecks, optimizing resource allocation, and uncovering the root causes of undesired process behaviour/outcomes.

- Supporting process stakeholders and process users in the execution of processes by making accurate process behaviour forecasts for running cases

- Enabling organisations to take an analytics-driven approach to decision making by providing decision support to process users and knowledge workers

- Supporting the practice of risk management by assisting in early identification and possible mitigation of undesired effects

In this post, we will try to understand the fundamentals of process analytics.

Event logs:

When a business process instance (a case) is executed, its execution trace is usually recorded by the information systems deployed inside an organization. An event log is a collection of such traces, where each trace is a sequence of events naturally ordered by their timestamps. Each event can be characterized by multiple descriptors, including an activity, a resource, and a timestamp. In most scenarios, we can assume that the activity is discrete and drawn from a finite set. Other attributes associated with an event can be either continuous (e.g., time to complete a sub-task) or discrete (e.g., type of skills needed).
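To make this structure concrete, here is a minimal Python sketch that groups a flat list of event records into per-case traces ordered by timestamp. The field names (`case_id`, `activity`, `resource`, `timestamp`) and the sample records are hypothetical; real exports from an information system will use their own attribute names.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical flat event log: one record per event, in arbitrary order.
events = [
    {"case_id": "c1", "activity": "Register", "resource": "alice", "timestamp": "2024-01-02T09:00"},
    {"case_id": "c2", "activity": "Register", "resource": "bob", "timestamp": "2024-01-02T09:30"},
    {"case_id": "c1", "activity": "Approve", "resource": "carol", "timestamp": "2024-01-02T11:00"},
    {"case_id": "c1", "activity": "Check", "resource": "bob", "timestamp": "2024-01-02T10:00"},
    {"case_id": "c2", "activity": "Reject", "resource": "carol", "timestamp": "2024-01-02T12:15"},
]

def build_traces(events):
    """Group events by case and order each case's events by timestamp."""
    by_case = defaultdict(list)
    for e in events:
        by_case[e["case_id"]].append(e)
    traces = {}
    for case_id, case_events in by_case.items():
        case_events.sort(key=lambda e: datetime.fromisoformat(e["timestamp"]))
        traces[case_id] = case_events
    return traces

traces = build_traces(events)
for case_id, trace in traces.items():
    print(case_id, [e["activity"] for e in trace])
```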

In order to develop process analytic capabilities for a given organization, it is crucial to collect relevant data in a manner that captures, for each executed process instance, the ordering of activities (events), the associated resources, and the outcomes. We can also view this past process execution data as representing either positive (value-adding) outcomes or negative (non-optimal) outcomes. Once collected, this process execution data will contain hidden insights and actionable knowledge of considerable business value.

Given process logs as input, process analytic machinery can be developed to generate predictive (what is the likely outcome?), prescriptive (what should be done next?) and diagnostic (why did things turn out the way they did?) insights that support (and provide an evidence base for) effective decision making.

Process Discovery

Understanding the properties of the currently deployed process (whose execution traces are available) is critical to knowing whether it is worth investing in improvements, where performance problems exist, how much variation there is across process instances, and what the root causes are. Implementing a strategy that allows an organization to develop process analytic capabilities also involves experimenting with various methods, tools and techniques. This allows the organization to understand the behaviour of its deployed processes, monitor currently running process instances, predict the future behaviour of those instances and provide better support for operational decision-making. Overall, process analytics can also be viewed as an organisational capability that enables organizations to stay competitive by better understanding their internal processes and identifying areas of improvement.
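One simple way to quantify how much variation there is across process instances is to count trace variants, i.e., distinct activity sequences, and their share of all cases. The sketch below assumes traces have already been reduced to tuples of activity names; the data is made up for illustration.

```python
from collections import Counter

# Hypothetical traces: each case reduced to its ordered sequence of activities.
traces = {
    "c1": ("Register", "Check", "Approve"),
    "c2": ("Register", "Check", "Approve"),
    "c3": ("Register", "Reject"),
    "c4": ("Register", "Check", "Check", "Approve"),
}

def variant_frequencies(traces):
    """Count how many cases follow each distinct activity sequence (variant)."""
    return Counter(traces.values())

variants = variant_frequencies(traces)
total = sum(variants.values())
for variant, count in variants.most_common():
    print(f"{count / total:.0%}  {' -> '.join(variant)}")
```

A long tail of rare variants is often a first hint that the process on the ground differs from the designed one.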

Not long ago, Davenport and Spanyi wrote about process mining, hailing it as a capability that can 'revitalize process management in firms' [1]. The first application of process mining is process discovery: given an event log, a process mining algorithm produces a process model (explaining the behavior recorded in the log) without using any a priori information.

Various process discovery algorithms can be employed to automatically learn process models from raw event data. Some of the most popular algorithms used for process discovery are:

- Alpha Miner

- Alpha+, Alpha++, Alpha#

- Fuzzy Miner

- Heuristics Miner

- Multi-phase Miner

- Genetic process mining
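As an illustration of how discovery starts from raw event data, the sketch below computes the first step of the Alpha Miner: the directly-follows relation and the "footprint" that classifies each pair of activities as causal (`->`), reverse causal (`<-`), parallel (`||`) or unrelated (`#`). It stops there; the later Alpha Miner steps that turn the footprint into a Petri net are omitted, and the log is hypothetical.

```python
from itertools import product

# Hypothetical log: each trace is a sequence of activity labels.
log = [
    ["a", "b", "c", "d"],
    ["a", "c", "b", "d"],
    ["a", "e", "d"],
]

def directly_follows(log):
    """Pairs (x, y) such that y immediately follows x in some trace."""
    return {(t[i], t[i + 1]) for t in log for i in range(len(t) - 1)}

def footprint(log):
    """Classify every activity pair into the Alpha Miner's ordering relations."""
    df = directly_follows(log)
    activities = sorted({a for t in log for a in t})
    relations = {}
    for x, y in product(activities, repeat=2):
        if (x, y) in df and (y, x) not in df:
            relations[(x, y)] = "->"   # causality
        elif (y, x) in df and (x, y) not in df:
            relations[(x, y)] = "<-"   # reverse causality
        elif (x, y) in df and (y, x) in df:
            relations[(x, y)] = "||"   # parallel
        else:
            relations[(x, y)] = "#"    # never directly follow each other
    return activities, relations

activities, rel = footprint(log)
print("   " + "  ".join(f"{a:>2}" for a in activities))
for x in activities:
    print(f"{x:>2} " + "  ".join(f"{rel[(x, y)]:>2}" for y in activities))
```

Here `b` and `c` appear in both orders, so they are classified as parallel, while `b` and `e` never follow each other and end up as unrelated (alternative branches after `a`).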

Process mining helps organizations recognize and understand the ground realities of the processes being followed inside the organization, giving them the opportunity to improve or redesign them. Process mining is possible because organizations routinely log the events that occur during the execution of a process. Events may refer to any of the activities an organization carries out to realize its business goals. The event log contains information about the start and completion of the various tasks executed by resources at particular times, allowing organizations to correlate recorded event data and extract process models similar to the ones designed by business analysts.

These algorithms and techniques allow us to go beyond traditional process management analysis and approaches. Overall, process mining addresses business process discovery, business process monitoring and bottleneck identification, allowing organizations to streamline their existing processes.

Process Conformance and Enhancement:

Process mining approaches can also be used to check conformance (which is useful during process audits) and to detect deviations in past executions. The second type of process mining is therefore conformance checking, where we compare an existing process model with an event log of the same process. The idea is to check whether the actual process (as recorded in the event log) aligns with the process model that was originally designed and deployed. The third type of process mining is enhancement, which is used to improve or extend an existing process model using information recorded in the event log. For example, by using timestamps and frequencies, we can identify bottlenecks, diagnose performance-related problems and analyze throughput times. This helps us explore different process redesign and control strategies.
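The sketch below illustrates both ideas on a deliberately simplified model: conformance is checked against a hypothetical model expressed as allowed start activities, end activities and directly-follows transitions, and enhancement annotates each observed transition with its mean elapsed time. This simplification is chosen for brevity; conformance checking in practice usually replays traces on a Petri net (token replay) or computes alignments.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical "designed" model, reduced to allowed starts, ends and transitions.
MODEL = {
    "start": {"Register"},
    "end": {"Approve", "Reject"},
    "edges": {("Register", "Check"), ("Check", "Approve"), ("Check", "Reject")},
}

def deviations(trace, model):
    """Conformance: list the parts of a trace the simplified model does not allow."""
    acts = [a for a, _ in trace]
    if not acts:
        return [("empty trace", None)]
    found = []
    if acts[0] not in model["start"]:
        found.append(("bad start", acts[0]))
    for x, y in zip(acts, acts[1:]):
        if (x, y) not in model["edges"]:
            found.append(("unexpected step", (x, y)))
    if acts[-1] not in model["end"]:
        found.append(("bad end", acts[-1]))
    return found

def mean_transition_hours(log):
    """Enhancement: average elapsed hours between consecutive activities."""
    durations = defaultdict(list)
    for trace in log:
        for (x, tx), (y, ty) in zip(trace, trace[1:]):
            delta = datetime.fromisoformat(ty) - datetime.fromisoformat(tx)
            durations[(x, y)].append(delta.total_seconds() / 3600)
    return {pair: mean(values) for pair, values in durations.items()}

# Hypothetical recorded log: (activity, timestamp) pairs per case.
log = [
    [("Register", "2024-01-02T09:00"), ("Check", "2024-01-02T10:00"), ("Approve", "2024-01-03T10:00")],
    [("Register", "2024-01-02T09:30"), ("Approve", "2024-01-02T12:30")],
]

for i, trace in enumerate(log):
    print(i, deviations(trace, MODEL))
print(mean_transition_hours(log))
```

The second case skips `Check` and is flagged, while the long `Check -> Approve` wait in the first case is the kind of bottleneck that enhancement makes visible.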

Predictive Process Monitoring:

Apart from mining, event data can also be leveraged to build machine learning models that make various process-related predictions. Predictive process monitoring uses both historical execution data and data from the current instance to forecast the behaviour and various properties of process instances. It enables context-aware process execution by monitoring processes and predicting the likelihood of various process outcomes. Predictive monitoring methods can provide run-time operational support by continuously monitoring a running case and presenting a real-time performance overview. Such techniques also allow early identification of deviations from the desired process design/outcome, allowing process users to course-correct by taking pre-emptive measures.

Overall, predictive techniques are an effective decision support tool for continuously monitoring process progress and reducing the overall risk associated with negative outcomes.

Predictive monitoring aims to predict the future behaviour of running instances and thereby provide better support for operational decision-making across the organization. A few examples of predictive monitoring tasks include:

- Predicting the cycle time of a given process instance.

- Predicting the total remaining time to completion for a partially executed process instance. There are several related time-based prediction tasks, such as predicting the remaining cycle time, completion time and case duration, or predicting delayed executions of running instances.

- Estimating the likely cost of executing the remainder of the process.

- Predicting whether the running process instance will meet all its performance targets. Predicting the performance outcome of an incomplete case from its (partial) trace means going beyond simple yield predictions and considering a number of other factors/KPIs of interest.
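A common baseline for remaining-time prediction is to abstract each running case by its current state and average the remaining times observed for that state in completed cases. The sketch below uses the last executed activity as the state (an assumption; richer approaches use the whole prefix or feed encoded prefixes to a machine learning model). Times are hypothetical hours since case start.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical completed cases: (activity, hours since case start) pairs.
history = [
    [("Register", 0), ("Check", 2), ("Approve", 10)],
    [("Register", 0), ("Check", 4), ("Approve", 8)],
    [("Register", 0), ("Reject", 1)],
]

def train(history):
    """Collect remaining times observed after each activity (last-activity abstraction)."""
    remaining = defaultdict(list)
    for case in history:
        end = case[-1][1]
        for activity, t in case:
            remaining[activity].append(end - t)
    all_values = [v for values in remaining.values() for v in values]
    per_activity = {a: mean(values) for a, values in remaining.items()}
    return per_activity, mean(all_values)  # global mean is the fallback

def predict_remaining(prefix, model):
    """Predict remaining hours for a running case from its last observed activity."""
    per_activity, fallback = model
    last_activity = prefix[-1][0]
    return per_activity.get(last_activity, fallback)

model = train(history)
print(predict_remaining([("Register", 0), ("Check", 3)], model))
```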

Variant Analysis:

Business process variants are process instances that may deviate from the desired course of execution, resulting in an unexpected or unplanned outcome (their analysis is also known as deviance analysis or drift detection). Variations may occur due to contextual/environmental factors, human factors, or explicit decisions made by process participants. In a given process log, process variants are subsets of instances that violate the behavior prescribed by the model and can be distinguished by certain characteristics they are correlated with, e.g., violating compliance rules or missing set performance targets. In deviance mining, we are interested in identifying the variants of a process that may exist and diagnosing the root causes of process variation by analyzing or comparing two or more event logs. That is, given a set of event logs of two or more process variants, how can we identify and explain the differences among these variants? Understanding the factors leading to variation can help managers make better decisions and improve overall process performance.
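A minimal way to compare two variants is to measure, for each directly-follows pair of activities, the share of traces in each log that contain it, and rank the pairs by the size of the difference. The two logs below (on-time versus late cases) are hypothetical, and this frequency comparison is only one of many possible deviance-mining techniques; it points to candidate differences rather than proving root causes.

```python
from collections import Counter

def df_frequencies(log):
    """Share of traces in the log that contain each directly-follows pair."""
    counts = Counter()
    for trace in log:
        # count each pair once per trace so long, repetitive traces do not dominate
        counts.update(set(zip(trace, trace[1:])))
    return {pair: c / len(log) for pair, c in counts.items()}

def compare_variants(log_a, log_b, top=3):
    """Rank directly-follows pairs by how differently often they occur in two logs."""
    fa, fb = df_frequencies(log_a), df_frequencies(log_b)
    pairs = set(fa) | set(fb)
    diffs = {p: fa.get(p, 0.0) - fb.get(p, 0.0) for p in pairs}
    return sorted(diffs.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top]

# Hypothetical logs: cases that met their deadline vs. cases that missed it.
on_time = [["Register", "Check", "Approve"]] * 8 + [["Register", "Check", "Rework", "Check", "Approve"]] * 2
late = [["Register", "Check", "Rework", "Check", "Approve"]] * 7 + [["Register", "Check", "Approve"]] * 3

for pair, diff in compare_variants(on_time, late):
    print(f"{pair}: {diff:+.0%} (on-time minus late)")
```

In this made-up data, the rework loop appears in far more late cases than on-time ones, which would be a natural starting point for root-cause analysis.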

Case variant/outcome prediction aims to predict which process instances will end up in an undesirable state (measured as the likelihood and severity of a fault occurring or a compliance rule being violated). Before training, historic instances can be labelled as normal or deviant, and process-related risks can then be classified using any traditional machine learning technique. Case outcomes can be assessed by first checking whether the process has met its 'hard goals' and then its 'soft goals' (determined by KPIs such as time, quality, cost, etc.).
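As an example of the traditional machine learning route, the sketch below labels historical prefixes as normal or deviant, encodes each prefix as a bag of activity counts, and classifies a running case with k-nearest neighbours. The encoding, the choice of k-NN, `k=3` and the data are all illustrative assumptions; in practice one would use a library classifier, more history and richer features (timestamps, resources, case attributes).

```python
import math
from collections import Counter

# Hypothetical labelled history: activity prefixes of past cases and their outcome label.
history = [
    (["Register", "Check", "Approve"], "normal"),
    (["Register", "Check", "Approve"], "normal"),
    (["Register", "Check", "Rework", "Check"], "deviant"),
    (["Register", "Rework", "Check"], "deviant"),
    (["Register", "Check"], "normal"),
]

def encode(prefix):
    """Frequency (bag-of-activities) encoding of a trace prefix."""
    return Counter(prefix)

def distance(a, b):
    """Euclidean distance between two sparse activity-count vectors."""
    keys = set(a) | set(b)
    return math.sqrt(sum((a[k] - b[k]) ** 2 for k in keys))

def predict_outcome(prefix, history, k=3):
    """Majority label among the k most similar historical prefixes (k-NN)."""
    x = encode(prefix)
    neighbours = sorted(history, key=lambda h: distance(x, encode(h[0])))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0], votes

label, votes = predict_outcome(["Register", "Check", "Rework"], history)
print(label, dict(votes))
```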

Similarly, compliance monitoring techniques aim to prevent compliance violations by monitoring ongoing executions of a process and checking whether they comply with certain business constraints.
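The sketch below monitors a running case against two hypothetical constraints loosely modelled on Declare-style templates: `precedence` (an activity may only occur after another one has occurred) and `at_most_once`. Both have the property that once a prefix violates them, no continuation can repair the violation, which is why they can be flagged while the case is still running. The constraint names and their tuple encoding are assumptions for illustration.

```python
# Hypothetical business constraints:
#   ("precedence", a, b):      b may only occur after a has occurred
#   ("at_most_once", a, None): a may occur at most once per case
CONSTRAINTS = [
    ("precedence", "Check", "Approve"),
    ("at_most_once", "Approve", None),
]

def check_prefix(prefix, constraints):
    """Return the constraints already violated by a running (incomplete) case."""
    violations = []
    for kind, a, b in constraints:
        if kind == "precedence":
            seen_a = False
            for activity in prefix:
                if activity == a:
                    seen_a = True
                elif activity == b and not seen_a:
                    violations.append((kind, a, b))
                    break
        elif kind == "at_most_once" and prefix.count(a) > 1:
            violations.append((kind, a, b))
    return violations

# Monitor a running case event by event and alert on the first violation.
running_case = []
for event in ["Register", "Approve", "Check"]:
    running_case.append(event)
    problems = check_prefix(running_case, CONSTRAINTS)
    if problems:
        print(f"after {event!r}: violated {problems}")
        break
```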

Prescriptive Analytics:

Flexible execution of business process instances entails multiple critical decisions, involving various actors and objects, taken to achieve better process outcomes. These decisions are variable in nature and context-dependent, e.g., resource allocation, selection of appropriate control-flow paths, and decisions embedded in process activities that affect business process outcomes. Sub-optimal decisions during process execution, such as picking the wrong execution path, can lead to cost overruns and missed deadlines. These decisions therefore require careful attention, and the ability to guide and automate decision making is crucial to maintaining and improving business process performance.

In prescriptive analytics, we are interested in developing capabilities for process decision support and automated real-time planning. Specifically, as part of a decision-support tool, we may solve one or more of the following problems, leveraging historic and current process data to provide intelligent assistance to process users and to guide process-related decisions:

- Recommend the best suffix for a partial task sequence, i.e., the remaining activities which, if executed, would lead to a desired outcome or desired performance characteristics, or identify the process path that would yield the best performance in a given context.

- Assist process users and knowledge workers, e.g., by generating optimal action recommendations for a process instance.

- Provide support for risk-aware process management, e.g., by identifying process-related risks early and generating recommendations for corrective actions that can help avoid a predicted metric deviation.

- Provide support for resource management, e.g., by assessing the suitability of a resource for executing a certain task and supporting resource allocation decisions by suggesting optimal work assignment policies.

- Provide strategic support for robust process execution and for developing robust strategic plans in adversarial settings.
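To illustrate next-step recommendation in its simplest form, the sketch below looks up completed cases that share the running case's prefix, groups them by the activity executed next, and recommends the activity whose cases had the lowest mean cost. The cost KPI, the `min_support` threshold and the data are hypothetical, and this purely frequency-based heuristic ignores case context and confounding factors that a real prescriptive method would need to account for.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical completed cases: activity sequence and final cost (lower is better).
history = [
    (["Register", "Check", "Approve"], 120),
    (["Register", "Check", "Approve"], 100),
    (["Register", "Escalate", "Approve"], 300),
    (["Register", "Check", "Rework", "Approve"], 250),
    (["Register", "Escalate", "Reject"], 280),
]

def recommend_next(prefix, history, min_support=2):
    """Suggest the next activity whose historical continuations had the lowest mean cost."""
    n = len(prefix)
    outcomes = defaultdict(list)
    for trace, cost in history:
        # only cases that share the running prefix and continued past it
        if len(trace) > n and trace[:n] == prefix:
            outcomes[trace[n]].append(cost)
    candidates = {a: mean(c) for a, c in outcomes.items() if len(c) >= min_support}
    if not candidates:
        return None, {}
    best = min(candidates, key=candidates.get)
    return best, candidates

print(recommend_next(["Register"], history))
print(recommend_next(["Register", "Check"], history))
```

The `min_support` filter avoids recommending an action on the strength of a single lucky (or unlucky) historical case.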

Implementation Strategy and First Steps

A Process Management System (PMS) is a software system designed to coordinate business processes using explicit process representations known as process models. These models serve as the primary means of guiding process enactment within a PMS by providing a clear depiction of process knowledge. There are three key dimensions in the development of process models:

- Control-Flow Perspective: This dimension focuses on the structure of a process, delineating tasks (which are atomic work units representing activities) and their relationships. These relationships are typically depicted using routing constructs such as sequences, parallel branches, and alternative branches.

- Data Perspective: Here, the emphasis lies on describing the data elements involved in the process, i.e., those that are consumed, produced, and exchanged during its execution.

- Resource Perspective: This dimension pertains to the operational and organizational context necessary for process execution. It outlines the resources involved, including people, systems, and services capable of executing tasks. Additionally, it considers the capabilities of these resources, encompassing qualifications or skills relevant for task assignment and execution.
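The three perspectives can be captured side by side in a single data structure. The sketch below is a hypothetical, minimal schema (not the format of any particular PMS): control flow as tasks and sequence relations, data as the elements each task consumes and produces, and resources as required versus available skills, with small helpers that query each perspective.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

@dataclass
class ProcessModel:
    # Control-flow perspective: tasks and the sequence relations between them.
    tasks: Set[str]
    flows: Set[Tuple[str, str]]
    # Data perspective: data elements each task consumes and produces.
    consumes: Dict[str, Set[str]] = field(default_factory=dict)
    produces: Dict[str, Set[str]] = field(default_factory=dict)
    # Resource perspective: skills each task needs and skills each resource has.
    required_skills: Dict[str, Set[str]] = field(default_factory=dict)
    resource_skills: Dict[str, Set[str]] = field(default_factory=dict)

    def successors(self, task: str) -> Set[str]:
        """Tasks that directly follow `task` in the control flow."""
        return {b for a, b in self.flows if a == task}

    def missing_inputs(self, task: str, available: Set[str]) -> Set[str]:
        """Data elements the task consumes that are not yet available."""
        return self.consumes.get(task, set()) - available

    def capable_resources(self, task: str) -> Set[str]:
        """Resources whose skills cover everything the task requires."""
        needed = self.required_skills.get(task, set())
        return {r for r, skills in self.resource_skills.items() if needed <= skills}

model = ProcessModel(
    tasks={"Register", "Check", "Approve"},
    flows={("Register", "Check"), ("Check", "Approve")},
    consumes={"Check": {"application"}, "Approve": {"application", "check_report"}},
    produces={"Register": {"application"}, "Check": {"check_report"}},
    required_skills={"Check": {"credit"}, "Approve": {"credit", "signing_authority"}},
    resource_skills={"alice": {"credit"}, "bob": {"credit", "signing_authority"}},
)
print(model.successors("Check"), model.capable_resources("Approve"))
```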

By considering these dimensions, process modeling activities within a PMS provide a comprehensive framework for managing and optimizing business processes.

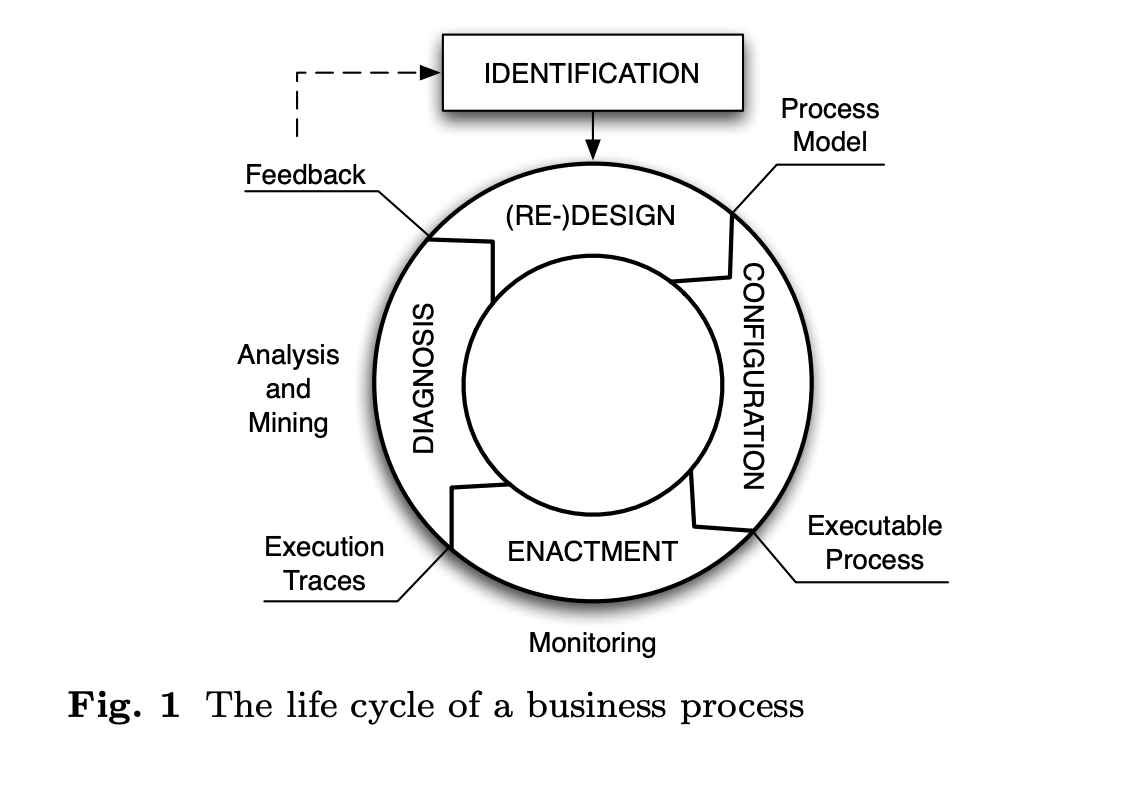

Business Process Management Lifecycle

The BPM lifecycle can be defined as follows: "The entry point to the cycle is the design and analysis phase, where the business processes are identified and provided with a formal representation. Newly created models and models from past iterations are verified and validated against current process requirements. In the configuration phase, the systems to use are selected, and the business processes identified before are implemented, tested, and deployed. During the enactment phase, the processes are operated, and the process execution is monitored and maintained. The resulting execution data is processed by the techniques of the evaluation phase, for example process mining. Using the knowledge gained from one iteration, the next iteration can be started by redesigning the business processes."

Process Identification/(Re)design: In process identification, after requirement analysis, process models are designed using a suitable modeling language. Existing models can be further refined/improved based on insights gathered in the previous cycle.

Process Analysis/diagnosis: In this step, process logs are typically analysed to diagnose problems and identify areas of potential improvement. Process Discovery methods are also useful here, allowing the analyst to reverse engineer the models from recorded process execution logs.

Process Implementation: In process implementation (also known as process enactment), a process management engine is sometimes used to support enactment by instantiating process instances and assigning their tasks to the relevant resources for execution. A PMS can also manage process routing (control-flow) by determining which tasks are enabled for execution.
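Routing questions such as "which tasks are enabled?" are often formalized with Petri nets. The sketch below assumes the process is given as a small hypothetical workflow net in which a task is enabled when all its input places hold a token, and executing (firing) it moves tokens to its output places. The net encodes an AND-split after `Register` and an AND-join before `Approve`.

```python
from collections import Counter

# Hypothetical workflow net: task -> (input places, output places).
NET = {
    "Register": ({"start"}, {"p1", "p2"}),
    "Check": ({"p1"}, {"p3"}),
    "Notify": ({"p2"}, {"p4"}),
    "Approve": ({"p3", "p4"}, {"end"}),
}

def enabled(marking, net):
    """Tasks whose input places all hold at least one token."""
    return sorted(t for t, (inputs, _) in net.items() if all(marking[p] > 0 for p in inputs))

def fire(marking, task, net):
    """Return the new marking after executing an enabled task."""
    if task not in enabled(marking, net):
        raise ValueError(f"{task} is not enabled")
    inputs, outputs = net[task]
    new = marking.copy()
    new.subtract({p: 1 for p in inputs})
    new.update({p: 1 for p in outputs})
    return +new  # drop places left with zero tokens

marking = Counter({"start": 1})
for task in ["Register", "Notify", "Check", "Approve"]:
    print(enabled(marking, NET), "-> fire", task)
    marking = fire(marking, task, NET)
print(dict(marking))
```

After `Register`, both `Check` and `Notify` are enabled (parallel branches), while `Approve` only becomes enabled once both have completed.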

Process Monitoring and Controlling: Organizational processes are monitored by various process support and management tools, while executed tasks are tracked and recorded to generate execution traces for later analysis.

BPMN:

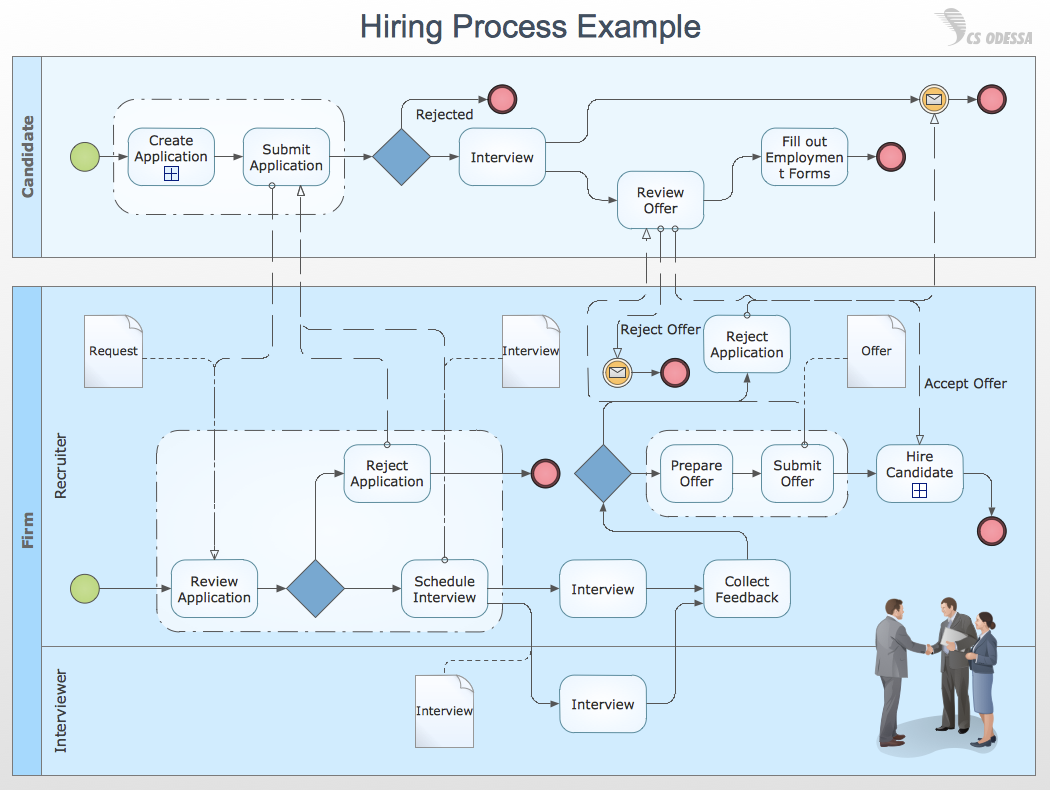

BPMN (Business Process Model and Notation) is a popular modeling notation and is considered an industry standard for modeling business processes. The standards included in this notation were developed and agreed upon by various vendors that provide modeling tools in this space. BPMN provides an intuitive and rich graphical notation for representing business processes. A few key components of BPMN include:

- Events, which are triggered by conditions occurring in the process and can be further divided into various sub-types.

- Activities, a generic term for work (one or more tasks) performed by the organization, which usually results in an effect on the environment.

- Gateways, which are used to represent the control flow.

- Lastly, sequence flows and message flows, which are used to connect the various elements of the diagram.
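BPMN models are also exchanged in an XML serialization defined by the BPMN 2.0 specification. The sketch below parses a small hand-written process (start event, task, exclusive gateway, end event and sequence flows) with Python's standard `xml.etree.ElementTree` and prints the flow. The process itself is hypothetical, and the diagram-interchange elements that modeling tools also emit for layout are omitted.

```python
import xml.etree.ElementTree as ET

# Hypothetical BPMN 2.0 process (layout information omitted).
BPMN_XML = """<definitions xmlns="http://www.omg.org/spec/BPMN/20100524/MODEL"
             id="defs" targetNamespace="http://example.com/bpmn">
  <process id="loan" isExecutable="false">
    <startEvent id="start"/>
    <task id="check" name="Check application"/>
    <exclusiveGateway id="decide"/>
    <task id="approve" name="Approve"/>
    <task id="reject" name="Reject"/>
    <endEvent id="end"/>
    <sequenceFlow id="f1" sourceRef="start" targetRef="check"/>
    <sequenceFlow id="f2" sourceRef="check" targetRef="decide"/>
    <sequenceFlow id="f3" sourceRef="decide" targetRef="approve"/>
    <sequenceFlow id="f4" sourceRef="decide" targetRef="reject"/>
    <sequenceFlow id="f5" sourceRef="approve" targetRef="end"/>
    <sequenceFlow id="f6" sourceRef="reject" targetRef="end"/>
  </process>
</definitions>"""

NS = {"bpmn": "http://www.omg.org/spec/BPMN/20100524/MODEL"}

def summarize(xml_text):
    """Return {element id: element type} and the list of (source, target) flows."""
    process = ET.fromstring(xml_text).find("bpmn:process", NS)
    kinds = {}
    for el in process:
        tag = el.tag.split("}")[1]  # strip the namespace prefix
        if tag != "sequenceFlow":
            kinds[el.get("id")] = tag
    flows = [(f.get("sourceRef"), f.get("targetRef"))
             for f in process.findall("bpmn:sequenceFlow", NS)]
    return kinds, flows

kinds, flows = summarize(BPMN_XML)
for src, tgt in flows:
    print(f"{src} ({kinds[src]}) -> {tgt} ({kinds[tgt]})")
```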

Process analytic capabilities should be developed in phases. The initial focus should be on mining event logs to better understand process behaviour and its effects retrospectively. Process execution data will contain interesting insights and actionable knowledge of considerable business value for process improvement. These insights will provide greater visibility of the process as it is actually executed on the ground (vs. as designed).

In the next phase, organizations should develop predictive monitoring capabilities for their existing deployed processes. For example, indicators available in event logs can be exploited to make predictions about time-related risks. Related tasks, such as predicting the remaining cycle time, completion time and case duration, or predicting delayed process executions and deadline violations of running instances, can be of considerable interest to many organizations.

Remember, sometimes the data we need is not available, i.e., in the short term we face the problem of data scarcity. An upfront investment and dedicated effort in data collection could prove invaluable in the long run. Simulated data is also a good way to kick-start things. For example, we can simulate mined artefacts (such as discovered process models) to obtain the data streams that would normatively emerge if these artefacts were an accurate reflection of reality (the specific simulation strategy and the type of data obtained would vary depending on the type of artefact).
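As a sketch of the simulation idea, assume the mined artefact is a directly-follows graph annotated with branching probabilities and mean activity durations (all values below are hypothetical). A random walk over the graph, with exponentially distributed durations, then produces a synthetic event log in the same shape as a recorded one. Fixing the random seed keeps the generated log reproducible.

```python
import random
from datetime import datetime, timedelta

# Hypothetical mined artefact: next-activity probabilities and mean durations (hours).
TRANSITIONS = {
    "Register": [("Check", 1.0)],
    "Check": [("Approve", 0.7), ("Rework", 0.2), ("Reject", 0.1)],
    "Rework": [("Check", 1.0)],
    "Approve": [],
    "Reject": [],
}
MEAN_HOURS = {"Register": 0.5, "Check": 2.0, "Rework": 4.0, "Approve": 1.0, "Reject": 0.5}

def simulate_case(case_id, start, rng, max_steps=50):
    """Random walk through the graph; each event's timestamp marks when the activity starts."""
    events, activity, t = [], "Register", start
    for _ in range(max_steps):
        events.append({"case_id": case_id, "activity": activity, "timestamp": t.isoformat()})
        t += timedelta(hours=rng.expovariate(1 / MEAN_HOURS[activity]))
        options = TRANSITIONS[activity]
        if not options:  # reached an end activity
            break
        targets, weights = zip(*options)
        activity = rng.choices(targets, weights=weights)[0]
    return events

def simulate_log(n_cases, seed=42):
    """Generate a synthetic flat event log; cases start one hour apart."""
    rng = random.Random(seed)  # fixed seed for reproducible synthetic logs
    start = datetime(2024, 1, 1, 9, 0)
    log = []
    for i in range(n_cases):
        log.extend(simulate_case(f"case{i}", start + timedelta(hours=i), rng))
    return log

synthetic = simulate_log(100)
print(len(synthetic), synthetic[:3])
```

The synthetic log can then be fed to the same discovery and monitoring tooling as real data, with the caveat that it can only reflect what the artefact already encodes.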

Conclusion and Summary: Adopting process analytics techniques means taking a data-driven approach to identifying performance bottlenecks, reducing costs, extracting insights and optimizing the utilization of available resources. Similarly, predictive techniques are an effective decision support tool for continuously monitoring process progress and reducing the overall risk associated with negative outcomes. Developing a process analytic capability will help in leveraging correlations between how process inputs were administered and the corresponding outcomes (both quantitative and qualitative). Given process logs as input, the process analytic machinery developed as part of the initiative will generate predictive (what is the likely outcome?), prescriptive (what should be done next?) and diagnostic (why did things turn out the way they did?) insights to support (and provide an evidence base for) business decisions.

[1] https://hbr.org/2019/04/what-process-mining-is-and-why-companies-should-do-it