Following are notes complied from various sources in order for me to understand the history of big ideas leading to modern day development of the field of computer science.

Introduction: What is Computer Science?

Knuth (1974b) said that CS is the study of algorithms and surrounding phenomena such as the computers that they run on. CS is the the study both of algorithms and of the computers that execute them.

CS can have multiple definitions:

- natural science of procedure

- “artificial science” (as opposed to the “natural science”) of the phenomena surrounding computers.

- CS is the study of how to represent and process information and of the machines and systems that do this

- said that it is a new kind of science (neither a physical, a biological, nor a social science) of natural and artificial information processes

- Loui (1987) suggested that CS is a new kind of engineering that studies the theory, design, analysis, and implementation of information-processing algorithms

- "CS is “a sort of spectrum . . . with ‘science’ on the one end and ‘engineering’ on the other”

If we want to pick one then we can settle on the following:

CS is the scientific study of what problems can be solved, what tasks can be accomplished, and what features of the world can be understood “computationally”—that is, using the minimal language of a Turing Machine—and then to provide algorithms to show how this can be done efficiently, practically, physically, and ethically.

History: Genesis of Programmable Computing

The Story of Programmable Computing starts in 1815 with Augusta Ada King, Countess of Lovelace. Ada Augusta Lovelace is best known as the first computer programmer in history. She was born in England and her father Lord Byron was a famous poet, while her mother Anne Isabella Byron was a mathematician. She was encouraged to study math by her mother and carried the imagination developed/inherited from her father. At around 1841, her Mathematical talents led her to work with Charles Babbage(a fellow British mathematician).

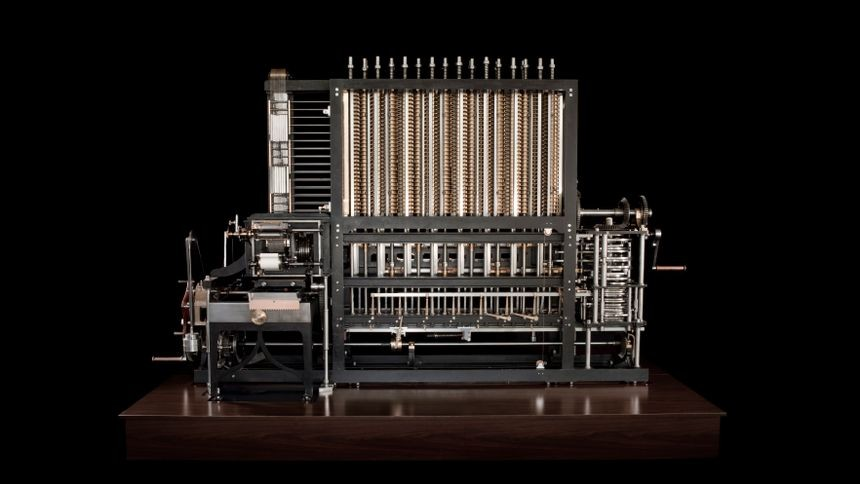

She translated his works on the analytical engine(a highly advanced mechanical calculator often considered a forerunner of the electronic calculating computers of the 20th century) and added her notes. Ada Lovelace's "notes," described the analytical engine( published in Taylor's scientific memoirs in 1843). They contained a ground-breaking description of the possibilities of programming the machine to go beyond number-crunching to "computing" in the wider sense in which we understand the term today.

She wrote about "Analytical Engine” with such clarity and insight that her work became the premier text explaining the process now known as computer programming. She was primarily interested in the ideas behind programming the Analytical Engine. She referred to the "sequences of instructions" as language and thought about and used concepts like arrays, loops, subroutines, and jumps in her programming. With a set of instructions which she composed, she created a forerunner of modern programming languages.

Apparent in the modern computer, her writings discussed the following aspects in full detail:

- Operations necessary for solving mathematical problems

- Distinction between types and operators

- Loop statements

- Arrays

- Subroutines

- Stored programs

A programming language was named after her. i.e The Ada programming language. Which allows for great precision and serious computing power. Ada’s work inspired the Lovelace award, which today honors pioneering women in computer science. Its amazing and still unbelievable how far we have come from the first computer (video below):

Follow excerpts have been taken from the wikipedia article 'History of artificial intelligence':

Foundations of Computer Science and Logic

The evolution of computer science from mathematical logic culminated in the 1930s, with two landmark papers: Claude Shannon’s “A Symbolic Analysis of Switching and Relay Circuits,” and Alan Turing’s “On Computable Numbers, With an Application to the Entscheidungs problem.” In the history of computer science, Shannon and Turing are towering figures, but the importance of the philosophers and logicians who preceded them is frequently overlooked.- How Aristotle Created the Computer By Chris Dixon

In the 17th century, Leibniz, Thomas Hobbes and René Descartes explored the possibility that all rational thought could be made as systematic as algebra or geometry.[16] Hobbes famously wrote in Leviathan: "reason is nothing but reckoning".[17] Leibniz envisioned a universal language of reasoning (his characteristica universalis) which would reduce argumentation to calculation, so that "there would be no more need of disputation between two philosophers than between two accountants. For it would suffice to take their pencils in hand, down to their slates, and to say each other (with a friend as witness, if they liked): Let us calculate.”

Mechanisation of Argumentation:

In the 20th century, the study of mathematical logic provided the essential breakthrough that made artificial intelligence seem plausible. The foundations had been set by such works as Boole's The Laws of Thought and Frege's Begriffs schrift. Building on Frege's system, Russell and Whitehead presented a formal treatment of the foundations of mathematics in their masterpiece, the Principia Mathematica in 1913. Inspired by Russell's success, David Hilbert challenged mathematicians of the 1920s and 30s to answer this fundamental question: "can all of mathematical reasoning be formalized?"His question was answered by Gödel's incompleteness proof, Turing's machine and Church's Lambda calculus.

Possibilities and Limits of Mathematical reasoning

Their answer was surprising in two ways. First, they proved that there were, in fact, limits to what mathematical logic could accomplish. But second (and more important for AI) their work suggested that, within these limits, any form of mathematical reasoning could be mechanized. The Church-Turing thesis implied that a mechanical device, shuffling symbols as simple as 0 and 1, could imitate any conceivable process of mathematical deduction. The key insight was the Turing machine—a simple theoretical construct that captured the essence of abstract symbol manipulation. This invention would inspire a handful of scientists to begin discussing the possibility of thinking machines.

Search:

When access to digital computers became possible in the middle fifties, a few scientists instinctively recognized that a machine that could manipulate numbers could also manipulate symbols and that the manipulation of symbols could well be the essence of human thought. This was a new approach to creating thinking machines.

Reasoning as search: Many early AI programs used the same basic algorithm. To achieve some goal (like winning a game or proving a theorem), they proceeded step by step towards it (by making a move or a deduction) as if searching through a maze, backtracking whenever they reached a dead end. This paradigm was called "reasoning as search". The principal difficulty was that, for many problems, the number of possible paths through the "maze" was simply astronomical (a situation known as a "combinatorial explosion"). Researchers would reduce the search space by using heuristics or "rules of thumb" that would eliminate those paths that were unlikely to lead to a solution. In 1955, Allen Newell and (future Nobel Laureate) Herbert A. Simon created the "Logic Theorist" (with help from J. C. Shaw). The program would eventually prove 38 of the first 52 theorems in Russell and Whitehead's Principia Mathematica, and find new and more elegant proofs for some. Simon said that they had "solved the venerable mind/body problem, explaining how a system composed of matter can have the properties of mind."(This was an early statement of the philosophical position John Searle would later call "Strong AI": that machines can contain minds just as human bodies do.)

Logic

Logic was introduced into AI research as early as 1958, by John McCarthy in his Advice Taker proposal. In 1963, J. Alan Robinson had discovered a simple method to implement deduction on computers, the resolution and unification algorithm. However, straightforward implementations, like those attempted by McCarthy and his students in the late 1960s, were especially intractable: the programs required astronomical numbers of steps to prove simple theorems. A more fruitful approach to logic was developed in the 1970s by Robert Kowalski at the University of Edinburgh, and soon this led to the collaboration with French researchers Alain Colmerauer and Philippe Roussel who created the successful logic programming language Prolog. Prolog uses a subset of logic (Horn clauses, closely related to "rules" and "production rules") that permit tractable computation. Rules would continue to be influential, providing a foundation for Edward Feigenbaum's expert systems and the continuing work by Allen Newell and Herbert A. Simon that would lead to Soar and their unified theories of cognition.

Critics of the logical approach noted, as Dreyfus had, that human beings rarely used logic when they solved problems. Experiments by psychologists like Peter Wason, Eleanor Rosch, Amos Tversky, Daniel Kahneman and others provided proof.[103] McCarthy responded that what people do is irrelevant. He argued that what is really needed are machines that can solve problems—not machines that think as people do.

Gödel’s theorem, which loosely states if you have a reasonably rich system of discrete symbols (the theorem does not refer to Mathematics in spite of the way it is usually presented).

Universal Computing Machine

In the first half of the 20th century, the notion of “computation” was made much more precise than the hitherto informal notion of ``a person writing numbers on a note pad following certain rules.'' Many different models of computation were discovered —Turing machines, lambda calculus, cellular automata, pointer machines, bouncing billiards balls, Conway’s Game of life, etc. and found to be equivalent. More importantly, they are all universal, which means that each is capable of implementing all computations that we can conceive of on any other model. The notion of universality motivated the invention of the standard electronic computer, which is capable of executing all possible programs. The computer’s rapid adoption in society in the subsequent half decade brought computation into every aspect of modern life, and made computational issues important in design, planning, engineering, scientific discovery, and many other human endeavors.

Overtime Theoretical Computer Science Matured. Theoretical CS, concerns itself with the following questions:

What is computation?

What can be computed, and how?

What can be computed efficiently, and how?

What can be computed practically, and how?

What can be computed physically, and how?

Key Insights were as follows:

- A (programmable) computer is a physically plausible implementation of anything logically equivalent to a universal Turing machine.

- An automaton (plural: automata) is a mathematical model of a computing device.

- Turing machines are an abstraction of computers with unbounded resources.

- Finite automata are an abstraction of computers with finite resource constraints.

- DFAs are the simplest type of automaton

minimal language can be described by four great insights of CS:

The representational insight: Only two nouns are needed to express any algorithm.

All the information about any computable problem can be represented using only two nouns: ‘0’ and ‘1’ Up until that time [that is, the time of publication of Shannon’s “Mathematical Theory of Communication” (Shannon, 1948)], everyone thought that communication was involved in trying to find ways of communicating written language, spoken language, pictures, video, and all of these different things— that all of these would require different ways of communicating. Claude said no, you can turn all of them into binary digits. And then you can find ways of communicating the binary digits. (Robert Gallager, quoted in Soni and Goodman 2017)

The processing insight: Only three verbs are needed.

The structural insight: Only three rules of grammar are needed.

A “closure” insight: Nothing else is needed. This is the import of the ChurchTuring Computability Thesis that anything logically equivalent to a Turing Machine (or the lambda calculus, or recursive functions, or . . . ) suffices for computation.

And there is a fifth insight that links this abstract language to computers:

The implementation insight: Algorithms can be carried out by physical devices

Complexity Theory

Computational complexity theory is concerned with how much

computational resources are required to solve a given task. The questions it studies include the following:

1. Why are some problems harder to solve than others? {Computability Theory}

2. Can algorithms use randomness (i.e., coin tossing) to speed up computation?

3. Many computational tasks involve searching for a solution across a vast space of possibilities (for example, the aforementioned tasks of solving linear equations and finding a maximal set of invitees to a dinner party). Is there an efficient search algorithm for all such tasks, or do some tasks inherently require an exhaustive search? this is the famous “P vs. NP” question that is considered the central problem of complexity theory. Computational search tasks of the form above arise in a host of disciplines including the life sciences, social sciences and operations research, and computational complexity has provided strong evidence that many of these tasks are inherently intractable.

4. Can hard problems be solved quicker if we allow the algorithms to err on a small number of inputs, or to only compute an approximate solution?

5. Is there any use for computationally hard problems? For example, can we use them to construct secret codes that are unbreakable? (at least in the universe’s lifetime).

6. Can we generate mathematical proofs automatically? Can we check a mathematical proof by only reading 3 probabilistically chosen letters from it?

At roughly 40 years of age, Complexity theory is still an infant science. Thus we still do not have complete answers for any of these questions. Furthermore, many major insights on these questions were only found in recent years.

References

2. Why Philosophers Should Care About Computational Complexity:https://arxiv.org/pdf/1108.1791.pdf

Kurt Godel: https://www.youtube.com/watch?v=i2KP1vWkQ6Y

Calude Shanon